OpenAI unveiled its latest flagship generative AI model, GPT-4o, on Monday. The "o" stands for "omni," a reference to the model's ability to handle text, speech, and video. The company plans to roll out GPT-4o gradually across its developer and consumer-facing products over the coming weeks.

Mira Murati, OpenAI's CTO, said that GPT-4o offers "GPT-4-level" intelligence but improves on GPT-4's capabilities across multiple modalities and media.

“GPT-4o integrates reasoning across voice, text, and vision,” Murati explained during a livestreamed presentation at OpenAI’s San Francisco office on Monday. “This is extremely crucial as we envision the future ways we will interact with machines.”

Until now, OpenAI's most advanced model was GPT-4 Turbo, which was trained on a combination of images and text and could handle tasks such as extracting text from images or describing their contents. GPT-4o adds a new modality: speech.

GPT-4o significantly improves the experience of ChatGPT, OpenAI's AI-powered chatbot. ChatGPT already had a voice mode that read the chatbot's text responses aloud using a separate text-to-speech model. GPT-4o builds on this, making interactions with ChatGPT feel more like talking to an assistant.

For example, users can now ask the GPT-4o-powered ChatGPT a question and interrupt it while it is answering. OpenAI says the model responds in "real time" and can pick up on nuances in a user's voice, generating responses in a range of emotional styles, including singing.

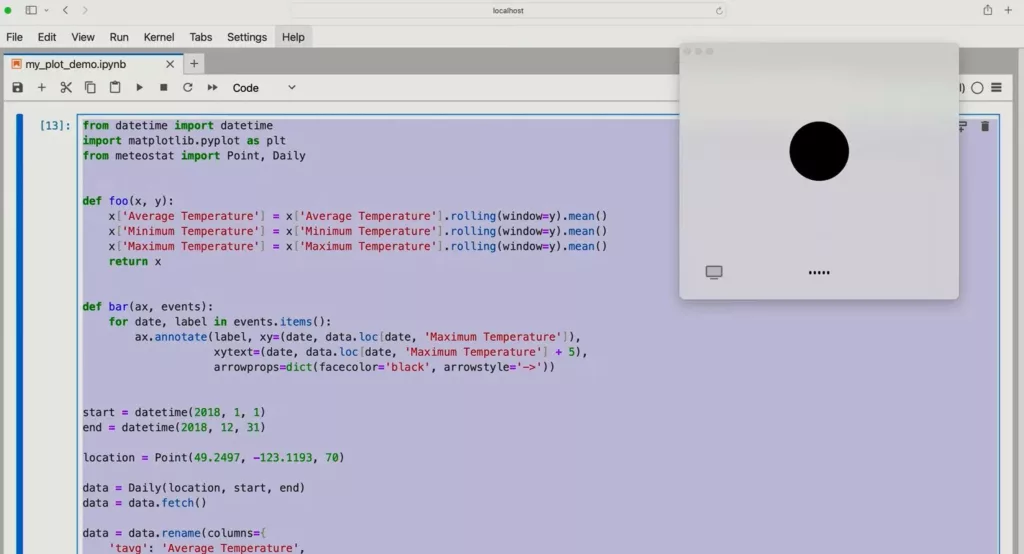

GPT-4o also improves ChatGPT's vision capabilities. Given a photo or a desktop screen, ChatGPT can now quickly answer questions about it, such as explaining software code shown in the image or identifying the brand of a shirt someone is wearing.

According to Murati, these features will continue to evolve. Today, GPT-4o can look at a photo of a menu in a foreign language and translate it. In the future, it could allow ChatGPT to watch a live sports game and explain the plays and rules as they happen.

Murati stressed that as these models grow more complex, the goal is to make interacting with ChatGPT more natural and seamless, so that users can focus on the interaction itself rather than on the user interface. "For the last few years, we have concentrated on enhancing these models' intelligence… This marks the first significant stride towards improving their usability," Murati added.

OpenAI says GPT-4o is also more multilingual, with improved performance in about 50 languages. In OpenAI's API, GPT-4o is twice as fast, costs half as much, and has higher rate limits than the company's previous top model, GPT-4 Turbo.

However, voice capabilities in the GPT-4o API will initially be available only to a small group of trusted partners, because of concerns about potential misuse.

Starting today, GPT-4o is available in the free tier of ChatGPT, and to subscribers of OpenAI's premium ChatGPT Plus and Team plans with five times higher message limits. Once users reach these limits, ChatGPT automatically switches to GPT-3.5, an older and less capable model. The improved ChatGPT voice experience powered by GPT-4o will arrive in alpha for Plus subscribers within the next month, alongside options aimed at enterprises.

In other news, OpenAI announced a refreshed ChatGPT web UI with a more conversational home screen and message layout, as well as a desktop version of ChatGPT for macOS that lets users ask questions via a keyboard shortcut or take and discuss screenshots. ChatGPT Plus users get first access to the app starting today, and a Windows version is due later this year.

Additionally, the GPT Store, OpenAI's library of third-party chatbots built on its AI models, along with the tools for creating them, is now open to users of ChatGPT's free tier. Free users can also now access ChatGPT features that were previously behind a paywall, such as a memory feature that remembers user preferences for future interactions, file and photo uploads, and web search for up-to-date answers.