In cognitive science, the journey from infancy to articulate communication is a puzzle that has captivated researchers for generations. Imagine the awe-inspiring task facing infants as they develop from mere sensory blobs into mobile, rational, and communicative beings within just a few years.

Picture a baby, perhaps adorned in pink leggings and a tutu, surrounded by a myriad of toys and stuffed animals, reaching out to grasp a Lincoln Log as a caregiver patiently introduces them to the concept of a “log.”

This process of language acquisition has sparked intense debate among scientists. Some argue that much of it can be attributed to associative learning, akin to how dogs learn to associate the sound of a bell with the arrival of food.

Others propose that inherent features of the human mind shape our understanding of language, while still others suggest that toddlers build their linguistic knowledge on the foundation laid by their understanding of other words.

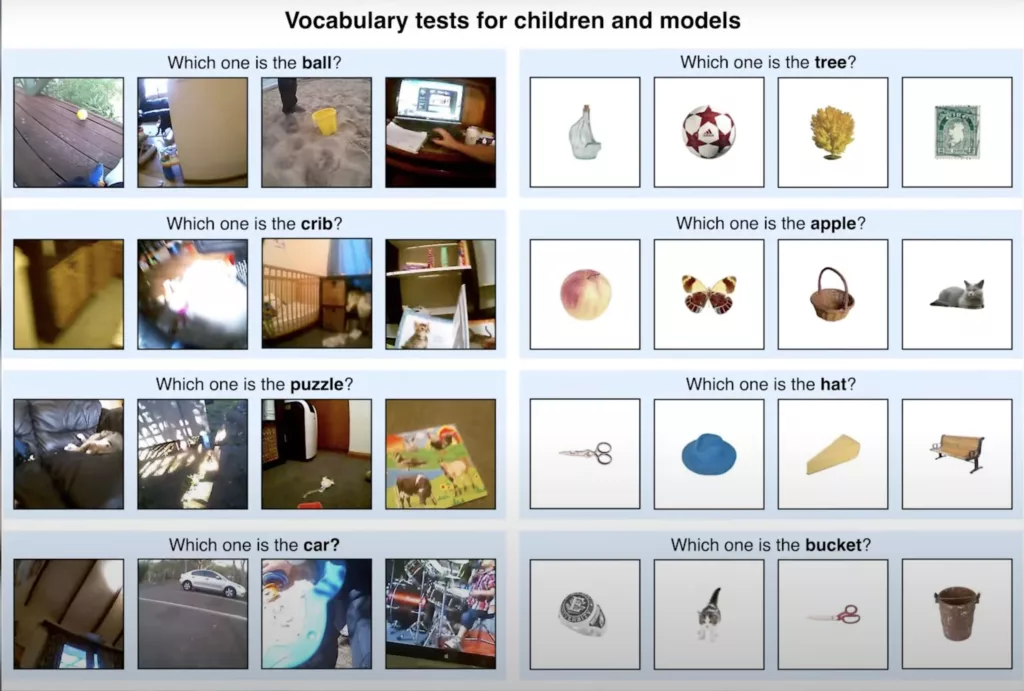

In this landscape of inquiry, researchers like Tammy Kwan and Brenden Lake from New York University have embarked on a pioneering endeavor, using innovative methods to delve into the intricacies of language acquisition.

Their focus? Their own twenty-one-month-old daughter, Luna. Equipped with a lightweight camera reminiscent of a GoPro, Luna becomes the protagonist in their study, her every interaction and utterance recorded from her unique perspective.

Dr. Lake’s ambitious goal is to use Luna’s sensory experiences to train a language model dubbed LunaBot, an AI infant intelligence. By exposing the model to the same sensory input that Luna encounters, he hopes to bridge the gap between human and artificial intelligence, paving the way for a deeper understanding of both.

However, integrating artificial intelligence with the nuances of human cognition presents a myriad of challenges. While state-of-the-art language models like OpenAI’s GPT-4 and Google’s Gemini excel at processing vast amounts of data, they lack the nuanced understanding that arises from sensory experiences such as tasting food or feeling hunger. Attempts to encode a child’s sensory stream into algorithms inevitably fall short, capturing only a fraction of the rich tapestry of human phenomenology.

Moreover, humans aren’t passive receptacles of data like neural networks; our intentions, desires, and beliefs intricately shape our learning experiences. Linda Smith, a psychologist at Indiana University, emphasizes the critical role of intentionality in human cognition, a dimension often overlooked in AI models fixated solely on data processing.

Yet, despite these challenges, researchers like Dr. Lake remain undeterred in their quest to unravel the mysteries of human intelligence through AI.

By training models on children’s experiences, as in one AI model built from footage of a child’s life, they aim to illuminate the mechanisms underlying language acquisition and concept formation.

As technology continues to evolve, the convergence of human and artificial minds raises profound questions about the essence of humanity itself. By pushing the boundaries of AI’s capabilities and exploring its parallels with human cognition, researchers hope to gain profound insights into our own cognitive processes while propelling the field of artificial intelligence into new frontiers of understanding.

For Dr. Lake and others like him, this intersection of AI infant intelligence and human cognition represents a frontier of scientific inquiry, offering opportunities for discovery and insight into the nature of intelligence itself.